Runtime performance management and spawn cycling

So now that we’ve got things in the scene, how do we deal with active AI? We know they need to cycle in and out as they become more or less relevant to the player. We know that things far off in the distance, especially things out of sight, don’t need to be running their full behavior. And we definitely know that off-screen things can be prioritized for removal. All of that ended up going through an implementation of the UE4 significance manager.

As a starting point, here’s a clip of the radar as I swim across a region.

Even in this short clip, there’s a multi-colored light show of AI, a lot of spawning and despawning, and even some different sizes. To start breaking this down I’ll jump right into significance management and what it meant for the game.

At its core, significance management is simple: you provide a way to score an actor, then significance management gives you a sorted list of those actors within a category. How you score the actor is up to you, and what that list means is up to you. For Maneater, I basically broke this into a few considerations:

- Scoring is just a means to an end. It gives me the actors as a sorted priority list. Lowest scores are the things most relevant to the player.

- Once the list is sorted, I can break it into buckets where each bucket represents a new level of overall behavior degradation (more on this shortly).

- Each bucket has a fixed size, and the overall system has a fixed size. If something causes us to overflow the total size, the least relevant actors start being force despawned.

- Importantly, these buckets can be tuned on a per-platform basis, so higher end platforms can have more active AI or more full-feature AI running at once than slower platforms, giving us some easy knobs to use to tune performance.

- For scoring, I have two basic metrics: distance from the player, and whether the actor is in front of or behind the player based on a dot product. Different classes have various modifications on the final score (e.g., higher priority AI controllers can mod their score down to reduce despawn chances), but we still have the same root scoring calculation.

- At a fixed max significance, actors will also be force despawned regardless of the system limits.

- Like spawn point filtering, I do this largely on the game thread because there just isn’t enough data to make it worth using additional worker threads. There’s a threaded sort once the list is completely scored, but the actual scoring process on the game thread was significantly under a millisecond.

- The more expensive part of the whole process is the physics state changes that happen on spawning/despawning, but that stuff can’t really be threaded, and having the state changes happen allows for better overall performance anyway. They also don’t happen on most frames.
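To make the scoring and bucketing above a bit more concrete, here is a minimal sketch in plain C++ rather than actual UE4 significance manager code. Everything in it is an assumption for illustration: the `Vec3`, `SignificanceConfig`, `ScoreActor`, and `AssignBuckets` names, the way the dot product scales the distance, and the tuning values are all hypothetical, not what shipped in Maneater. It also uses a plain `std::sort` where the real system used a threaded sort.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline float Dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline float Length(Vec3 v) { return std::sqrt(Dot(v, v)); }

// Marker for "this actor should be force despawned".
constexpr int kForceDespawn = -1;

struct ScoredActor {
    int id;
    float score;  // lower score = more relevant to the player
    int bucket;   // bucket index, or kForceDespawn
};

// Hypothetical tuning data; in practice this would vary per platform.
struct SignificanceConfig {
    std::vector<std::size_t> bucketSizes;  // fixed capacity of each bucket
    float maxSignificance;                 // beyond this score, always despawn
    float behindPenalty;                   // extra distance scale when fully behind
};

// Assumed scoring shape: distance, scaled up as the actor moves behind the
// player. playerForward is expected to be normalized. classModifier lets a
// class pull its score down (more sticky) or push it up.
float ScoreActor(Vec3 playerPos, Vec3 playerForward, Vec3 actorPos,
                 const SignificanceConfig& cfg, float classModifier = 1.0f) {
    const Vec3 toActor = actorPos - playerPos;
    const float dist = Length(toActor);
    if (dist <= 0.0f) return 0.0f;
    const float facing = Dot(playerForward, toActor) / dist;  // 1 ahead, -1 behind
    const float scale = 1.0f + cfg.behindPenalty * 0.5f * (1.0f - facing);
    return dist * scale * classModifier;
}

// Sorts by score, fills the fixed-size buckets in order, and marks anything
// that overflows the total capacity or exceeds maxSignificance for despawn.
void AssignBuckets(std::vector<ScoredActor>& actors, const SignificanceConfig& cfg) {
    std::sort(actors.begin(), actors.end(),
              [](const ScoredActor& a, const ScoredActor& b) { return a.score < b.score; });
    std::size_t bucket = 0;
    std::size_t usedInBucket = 0;
    for (ScoredActor& actor : actors) {
        // Advance past any buckets that are already full.
        while (bucket < cfg.bucketSizes.size() && usedInBucket >= cfg.bucketSizes[bucket]) {
            ++bucket;
            usedInBucket = 0;
        }
        if (bucket >= cfg.bucketSizes.size() || actor.score > cfg.maxSignificance) {
            actor.bucket = kForceDespawn;
        } else {
            actor.bucket = static_cast<int>(bucket);
            ++usedInBucket;
        }
    }
}
```

With a `behindPenalty` of 2, an actor 100 units directly behind the player scores the same as one 300 units directly ahead, which is the kind of forward-biased shape described here. Swapping in a different `bucketSizes` list per platform is the "easy knob" for tuning.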

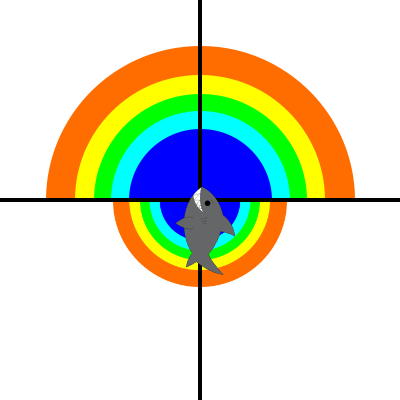

With the basic calculation I get a rough area shape something like this (also, totally not to scale):

As you can see, this significantly prioritizes keeping things in the direction of player movement, and significantly deprioritizes things behind the player so they despawn first. Each color band is another bucket deeper in the system, and another set of measures that reduce the performance impact of an actor in that band. Outside of the color bands, things are forcibly despawned. If we look at the buckets in place, roughly the following happens:

- Bucket 0: Full performance bucket, all logic on, full tick rate, full animation rate

- Bucket 1…n-1: Tick rate reduction for each bucket higher in the system. At the time that I left Tripwire, this was at 0.04 seconds per bucket on PC, Xbox One, and PS4. Switch was much more aggressive at 0.15 seconds per bucket, though I expect that value can be lowered by the time they ship. The tick rate reduction is applied to the AI controller, pawn, behavior tree, and animation.

- At a configurable bucket, navigation is completely turned off, but other AI systems are maintained on the drastically reduced tick rate.

- In the last bucket, the actors are put into full dormancy until they either despawn or become more relevant to the player.
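The bucket rules above can be sketched as a small lookup from bucket index to per-actor settings. This is a plain C++ illustration under my own assumptions, not the game's code: `BucketTuning`, `ActorPolicy`, and `PolicyForBucket` are hypothetical names, and I am reading "0.04 seconds per bucket" as a tick interval that grows linearly with the bucket index.

```cpp
#include <cstddef>

// Hypothetical per-platform tuning values.
struct BucketTuning {
    float tickIntervalPerBucket;      // seconds added per bucket (e.g. 0.04f or 0.15f)
    std::size_t bucketCount;          // total number of buckets
    std::size_t navigationOffBucket;  // first bucket with navigation disabled
};

// What a single actor should be doing in a given bucket.
struct ActorPolicy {
    float tickInterval;      // 0 means tick every frame
    bool navigationEnabled;
    bool dormant;
};

ActorPolicy PolicyForBucket(std::size_t bucket, const BucketTuning& tuning) {
    ActorPolicy policy{};
    // The last bucket is full dormancy (assuming there is more than one bucket).
    policy.dormant = tuning.bucketCount > 1 && bucket + 1 >= tuning.bucketCount;
    // Navigation shuts off at the configured bucket and stays off beyond it.
    policy.navigationEnabled = !policy.dormant && bucket < tuning.navigationOffBucket;
    // Bucket 0 runs at full rate; each deeper bucket ticks less often.
    policy.tickInterval = static_cast<float>(bucket) * tuning.tickIntervalPerBucket;
    return policy;
}
```

In UE4 terms, the resulting `tickInterval` would be what gets pushed to the controller, pawn, behavior tree, and animation, but how that wiring looks is specific to the project.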

While this ends up giving us a ton of game thread time back each frame, it also introduces a high rate of spawn churn. Spawns on slow platforms were sometimes hitting 40-50ms for a pawn/controller pair. While some of that could definitely be optimized away, I needed a bit more help to achieve that, and went with a spawn pool to manage these actors.

I won’t go into detail on the spawn pool since it’s not a complicated thing. Instead of spawning an actor, look in the pool to grab one thrown into stasis. Instead of despawning an actor, kill its tick and throw it into stasis way outside the playable area. If there’s anything I can really draw as a conclusion here, it’s do this as early as possible, then do it even earlier. Adapting a game that has its entire actor initialization system built around new actors to one built around recycled actors was a pain in the ass. It was just a pile of bugs from systems that needed to be reset or reinitialized or cleared. While all those things are not free (heck, physics resets were a large part of our respawn time and mostly needed to happen), I was seeing a 60%+ reduction in spawn costs by moving over to a pooled system.
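For anyone who hasn’t built one, the pool idea looks roughly like the sketch below. It is plain C++ with made-up names (`PooledActor`, `SpawnPool`, `ResetForReuse`), not the Maneater implementation, and the single `health` field stands in for all the per-life state that a real actor would need reset.

```cpp
#include <cstddef>
#include <memory>
#include <vector>

// Stand-in for a pawn/controller pair. All fields are illustrative.
struct PooledActor {
    bool active = false;
    bool tickEnabled = false;
    float health = 0.0f;

    // Everything that construction used to initialize has to be redone here.
    // Missing a system in this step is where the recycled-actor bugs come from.
    void ResetForReuse() { health = 100.0f; }
};

class SpawnPool {
public:
    // "Spawn": reuse an actor from stasis if one exists, otherwise pay the
    // full construction cost once.
    PooledActor* Acquire() {
        PooledActor* actor = nullptr;
        if (!stasis_.empty()) {
            actor = stasis_.back();
            stasis_.pop_back();
        } else {
            storage_.push_back(std::make_unique<PooledActor>());
            actor = storage_.back().get();
            ++constructed_;
        }
        actor->ResetForReuse();
        actor->active = true;
        actor->tickEnabled = true;
        return actor;
    }

    // "Despawn": kill the tick and park the actor instead of destroying it.
    void Release(PooledActor* actor) {
        if (actor == nullptr || !actor->active) return;
        actor->active = false;
        actor->tickEnabled = false;
        stasis_.push_back(actor);
    }

    std::size_t ConstructedCount() const { return constructed_; }

private:
    std::vector<std::unique_ptr<PooledActor>> storage_;  // owns every actor
    std::vector<PooledActor*> stasis_;                   // parked, ready for reuse
    std::size_t constructed_ = 0;
};
```

The pool itself is the trivial part. The real work lives in whatever `ResetForReuse` has to touch, which is why retrofitting this late is so painful.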